Concurrency in Go: shared memory

I’ve been playing around with some examples to better understand how Go’s memory model behaves on concurrent programs. I’m going to try and explain what I’ve learned regarding operation ordering on multi-core CPUs.

The example I’m going to show is an extension of the Message Passing example written by Russ Cox in his Hardware Model post. After reading it I wanted to experiment with this behavior and see it for myself. This is the result.

Alice and Bob in the office

Alice and Bob share an office. Their shifts sometimes overlap, but they often start and end at different times. They go in, do their work, and leave. However they have a rule: the last one to leave has to turn off the lights. The problem is that Alice can come very early and leave before Bob has even arrived, so when Bob leaves, he doesn’t know if Alice already left of hasn’t arrived yet. They came up with a system to let each other know that their work is done: they place a flag on their side of the office when they leave. This way either one of them, before leaving, can turn the lights off and put the flags down if they see that the opposite flag is up.

Let’s model that in code:

type Worker int

const (

Alice Worker = iota

Bob = iota

)

type Office struct {

// 0 => Alice's flag

// 1 => Bob's flag

flag [2]bool

lights bool

}

func (f *Office) Work(w Worker, wg *sync.WaitGroup) {

// Do work

// ...

// Set your own flag up

f.flag[w] = true

// If both flags are up, turn the lights off and put the flags down

if f.flag[w] && f.flag[1-w] {

f.lights = false

f.flag = []bool{false, false}

}

wg.Done()

}

For the sake of the narrative, let’s ignore the

sync.WaitGroupfor now. The point of this exercise is to highlight where Go synchronous model breaks, so we can better understand how (and why) concurrency-safe code is built.

The code is straight forward. Given that an Office starts its day with lights on, after all workers ran Work, Office.lights should be off.

Logically, there can only be three cases:

| Outcome | Cause |

|---|---|

| Alice turns the lights off | Bob’s goroutine finishes first |

| Bob turns the lights off | Alice’s goroutine finishes first |

| They both turn the lights off | They simultaneously raised their flags |

Given that the goroutines are scheduled fairly, there is an interleaving set of operations that can be seen as sequential from an external perspective. Every event happens in a total global order. This makes the third case possible. In a sequentially consistent environment, there can’t be a fourth case where no one switches the lights off.

For Bob to turn off the lights, we assume that Bob is the last goroutine that reaches the if statement. We know for sure that f.flag[Bob] is true because it was just set in this goroutine. In order for Bob to not turn off the lights, f.flag[Alice] has to be false, which means Alice mustn’t have finished her work, so Bob isn’t the last person in the office: a contradiction.

Let’s see this in action with a test:

func Test_WorkingDays(t *testing.T) {

office := new(Office)

// Lights on in the beginning of the day

office.lights = true

wg := sync.WaitGroup{}

wg.Add(2)

go office.Work(Alice, &wg)

go office.Work(Bob, &wg)

wg.Wait()

if office.lights {

t.Errorf("lights shouldn't be on after both finished their work")

}

}

When ran individually, the test will most likely pass. But there is a bug in our code. It may be obvious that we should use concurrent structures, like channel, mutex, atomic operations or wait groups to correctly indicate that their workday is done and lights should be off. However, it was not obvious to me at first why this code is wrong, and what problems can arise if kept like this.

To highlight the problem, observe what happens if I run this test a million times:

$ go test -count=1000000 .

--- FAIL: Test_WorkingDays (0.00s)

mem_test.go:49: lights shouldn\'t be on after both finished their work

--- FAIL: Test_WorkingDays (0.00s)

mem_test.go:49: lights shouldn\'t be on after both finished their work

... (x15)

FAIL

FAIL github.com/fredrb/concurrency/memory 10.114s

FAIL

This test failed 17 times but passed 999983 times. How could that be?

Disclaimer: there might be parts of this that I don’t fully understand, but I will try to explain this the best that I can.

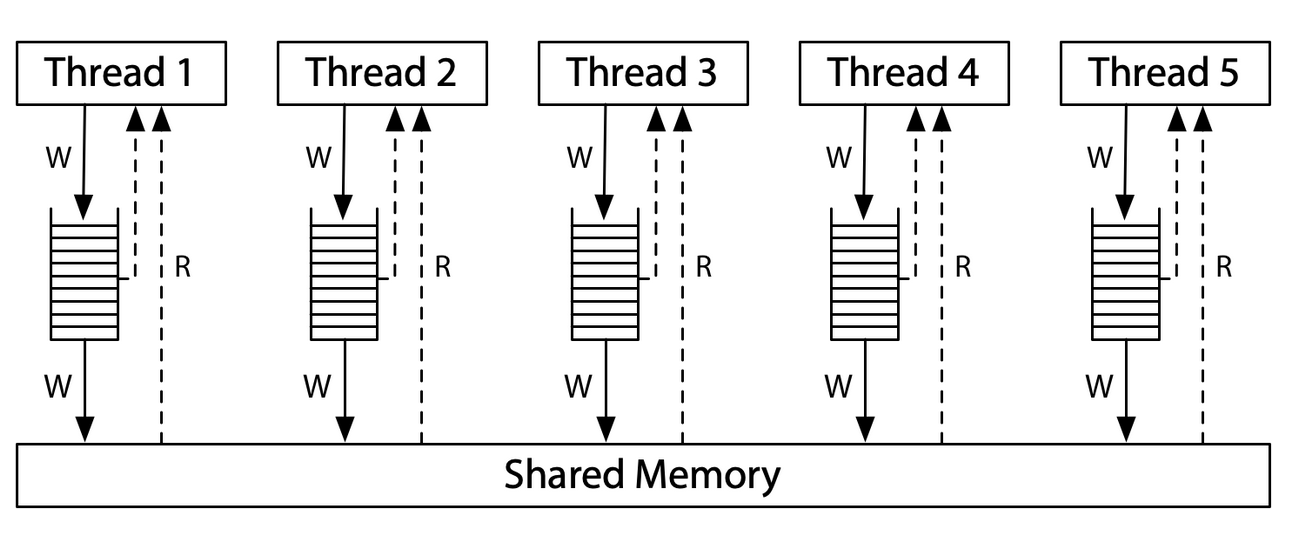

The main reason comes down to multi-core CPU’s caching data before synching with main memory. The behavior (and reasons to why this happens) might vary in different architectures. In x86 there is a buffer write queue for each thread that is accessing the shared memory. Whenever a thread has to read from the shared memory, the value is first read from the queue. Meaning that a thread sees its own writes before others do.

|

|---|

| Russ Cox: Hardware Memory Model post |

If both goroutines are scheduled to different CPU cores, the following memory model is valid, albeit unlikely:

| Thread 1 | Thread 2 | |

|---|---|---|

| Thread local data | flag: [ true, false ] |

flag: [ false, true ] |

| Don’t turn the lights off | Don’t turn the lights off |

This symptom can be caused if:

- The write operation was not propagated and it sits in the write buffer of the opposite thread

- Write operations were applied to main memory, but the a thread reads data from its cache instead

Modern CPU architectures don’t provide a sequentially consistent environment. Making the fourth case possible for this example: no one turns the lights off.

A fun way to prove that the CPU is the culprit is to run the same amount of tests with only a single CPU:

$ go test -cpu 1 -count=1000000 .

ok github.com/fredrb/concurrency/memory 6.824s

You can bring that number up the tests will always pass if there’s a single CPU. This is because all goroutines are scheduled to the same OS thread.

Fixing the program

We want our program to run on multiple cores, so we need to fix this issue. Luckily, the fix is rather trivial. In concurrent programming there is a concept called memory barrier or memory fence, which is used under the hood by Go’s concurrency APIs to synchronize CPU’s cache and the main memory. Go has an amazing first class support for concurrent operations. There are many ways we can solve this problem, but the most straightforward way to use atomic operations on integers instead of booleans locks.

Edit: as it was pointed out to me, the original solution I proposed below still has problems. Even thought the tests will pass, there is a race condition by setting shared variables in a goroutine – even if no other goroutine is active. Plus there is a risk that the data updated inside the if block won’t be seen by other goroutines since there is no synchronisation mechanism in place:

func (f *Office) Work(w Worker, wg *sync.WaitGroup) {

defer wg.Done()

atomic.StoreUint32(&f.flag[w], 1)

if atomic.LoadUint32(&f.flag[w]) == atomic.LoadUint32(&f.flag[1-w]) {

// DATA RACE:

f.lights = false

f.flag = [2]bool{false, false}

}

}

I tried to be clever. Don’t be clever.

Here’s a better solution to the problem using a mutex:

type Office struct {

flag [2]bool

flagLock sync.Mutex

lights bool

}

func (f *Office) DoWork(w Worker, wg *sync.WaitGroup) {

defer wg.Done()

// Do work

// ...

f.flagLock.Lock()

defer f.flagLock.Unlock()

f.flag[w] = true

if f.flag[w] && f.flag[1-w] {

f.lights = false

f.flag = [2]bool{false, false}

}

}

The individual run might be slower, since memory barriers are added before the store and load operations, but that’s negligible for our example.

Further reads

If you find this interesting and wants to read more about the topics, I’ve used the following resources to understand the behavior of my program:

- Russ Cox Memory Models series: https://research.swtch.com/mm

- Go’s memory model entry: https://go.dev/ref/mem

- The Art of Multiprocess Programming (book): Chapter 7. Even though this chapter is specifically about locks, it offers some examples that are pretty close to the office example I showed above.

If you hated this post, and can't keep it to yourself, consider sending me an e-mail at fred.rbittencourt@gmail.com. I'm more responsive to positive comments though.